According to an open-access Editor’s Choice article in ARRS’ American Journal of Roentgenology (AJR), convolutional neural networks (CNNs) trained to identify abnormalities on upper extremity radiographs are susceptible to a ubiquitous confounding image feature that could limit their clinical utility: radiograph labels.

“We recommend that such potential image confounders be collected when possible during dataset curation, and that covering these labels be considered during CNN training,” wrote corresponding author Paul H. Yi from the University of Maryland’s Medical Intelligent Imaging Center in Baltimore.

Yi and team’s retrospective study evaluated 40,561 upper extremity musculoskeletal radiographs from Stanford’s MURA dataset, which were used to train three DenseNet-121 CNN classifiers. Three types of input were used to distinguish normal from abnormal radiographs: original images with both anatomy and labels; images with laterality and/or technologist labels covered by a black box; and images in which the anatomy had been removed and only the labels remained.
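To make the three input types concrete, the following is a minimal sketch, not the authors' pipeline. It assumes a grayscale image stored as a list of pixel rows and a label bounding box that is already known; the article does not say how labels were located, and the function names `cover_box` and `keep_only_box` are hypothetical.

```python
def cover_box(image, top, left, height, width, fill=0):
    """Return a copy of the image with the given box blacked out (labels covered)."""
    covered = [row[:] for row in image]
    for r in range(max(top, 0), min(top + height, len(covered))):
        row = covered[r]
        for c in range(max(left, 0), min(left + width, len(row))):
            row[c] = fill
    return covered


def keep_only_box(image, top, left, height, width, fill=0):
    """Return a copy of the image where everything outside the box is blacked out (labels only)."""
    kept = [[fill] * len(row) for row in image]
    for r in range(max(top, 0), min(top + height, len(image))):
        for c in range(max(left, 0), min(left + width, len(image[r]))):
            kept[r][c] = image[r][c]
    return kept


# Toy 3x3 "radiograph"; the assumed label box occupies the top-right 2x2 corner.
original = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
labels_covered = cover_box(original, top=0, left=1, height=2, width=2)
labels_only = keep_only_box(original, top=0, left=1, height=2, width=2)
```

Both helpers leave the original image untouched, so the same radiograph can supply all three input variants.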

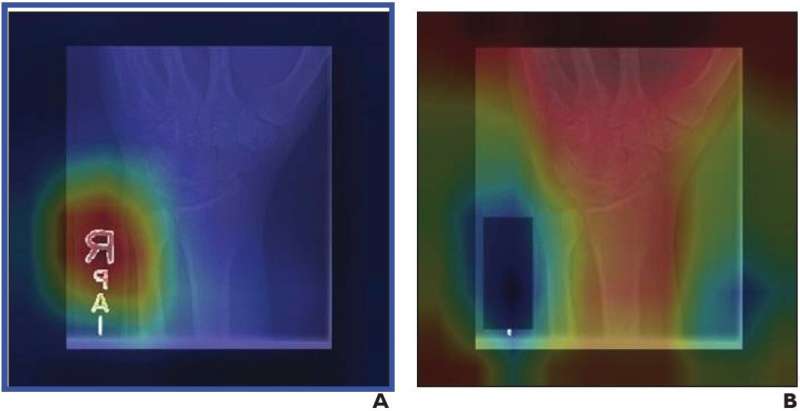

For the original radiographs, the area under the receiver operating characteristic curve (AUC) was 0.844, with the CNN frequently emphasizing laterality and/or technologist labels for decision-making. Covering these labels increased AUC to 0.857 (p=.02) and redirected CNN attention from the labels to the bones. Using labels alone, AUC was 0.638, above the 0.5 expected by chance, indicating that radiograph labels are associated with abnormal examinations.
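For readers unfamiliar with the metric, AUC can be read as the probability that a randomly chosen abnormal radiograph receives a higher score than a randomly chosen normal one. The sketch below computes it by direct pairwise comparison. The function name `auc` and the toy scores are illustrative assumptions, not the study's data or code.

```python
def auc(labels, scores):
    """AUC as the fraction of (abnormal, normal) pairs ranked correctly; ties count as half."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("AUC needs at least one abnormal and one normal example")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


# Toy example: 1 = abnormal, 0 = normal; scores are hypothetical model outputs.
toy_labels = [0, 0, 1, 1]
toy_scores = [0.1, 0.4, 0.35, 0.8]
toy_auc = auc(toy_labels, toy_scores)  # 3 of 4 pairs ranked correctly -> 0.75
```

A classifier that assigns the same score to every image scores 0.5 under this definition, which is why a labels-only AUC of 0.638 suggests the labels carry some signal.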
